Let’s create a fully Dockerized Node.js backend application with Express, TypeScript, TypeORM, and MySQL

Welcome to this tutorial on creating a modern REST API with Node.js, TypeScript, TypeORM, and MySQL. In this tutorial, we will be building a REST API called “Bookie” that allows users to create, read, update, and delete books from a MySQL database.

We will use Node.js as the runtime environment and Express as the web framework, and we will write our code in TypeScript for static typing. To connect to the MySQL database and perform database operations, we will use TypeORM, which provides a simple and powerful API for interacting with a variety of databases.

Throughout this tutorial, we will be building and testing the API step by step, starting with setting up the development environment and ending with a fully-functioning REST API that can be used to manage books in a MySQL database.

So let’s get started!

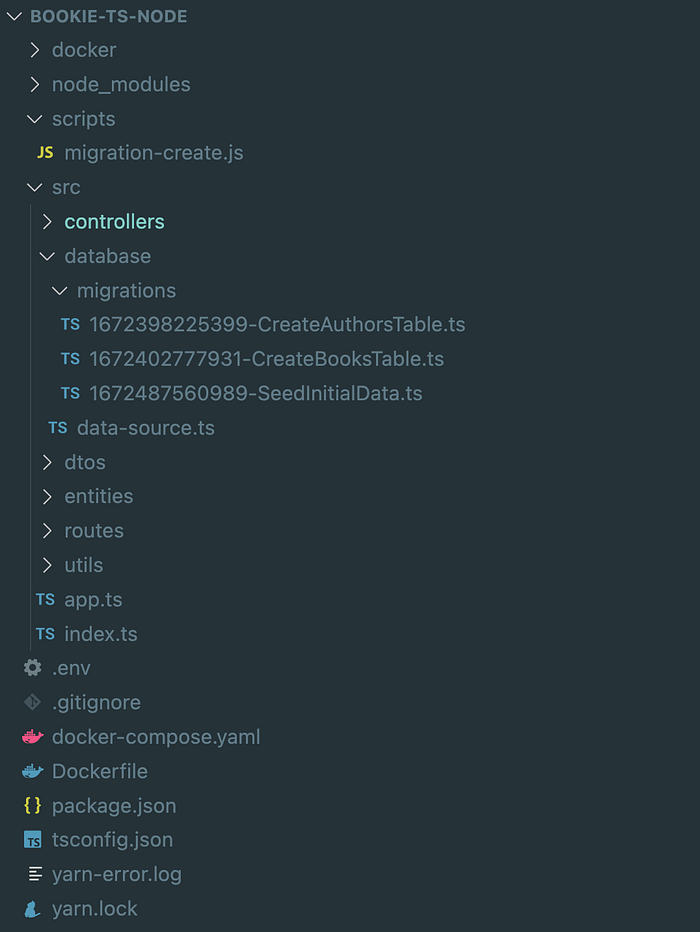

Folder structure

Initial Steps

Create a new directory for your project and navigate to it.

mkdir bookie-ts-node

cd bookie-ts-node

Create a Dockerfile in the root of your project to define the Docker image for your Node.js app:

FROM node:19-alpine

# Create app directory

WORKDIR /app

# Install app dependencies

COPY package.json yarn.lock ./

RUN yarn install

RUN yarn global add ts-node

# Copy source files

COPY . .

Now create another file, docker-compose.yaml, with the following contents:

version: '3'
networks:
  bookie:
services:
  mysql:
    image: mysql:8
    environment:
      MYSQL_ROOT_PASSWORD: root
      MYSQL_DATABASE: bookie
      MYSQL_USER: bookie
      MYSQL_PASSWORD: bookie
    volumes:
      - ./docker/mysql:/var/lib/mysql
    networks:
      - bookie
    ports:
      - "3308:3306"
  app:
    build:
      context: .
      dockerfile: Dockerfile
    volumes:
      - ./:/app
    environment:
      NODE_ENV: development
      DB_HOST: mysql
      DB_USERNAME: bookie
      DB_PASSWORD: bookie
      DB_DATABASE: bookie
    depends_on:
      - mysql
    command: yarn dev
    ports:
      - "3000:3000"
    networks:
      - bookie
volumes:
  mysql:

Since the Dockerfile copies package.json and yarn.lock, we will create empty files for now, which will be replaced later:

# Create empty package.json and yarn.lock files

touch package.json yarn.lock

# Build the Docker image and start the container in detached mode

docker-compose up --build -d

# View the list of running containers

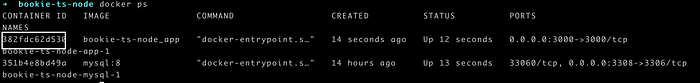

docker ps

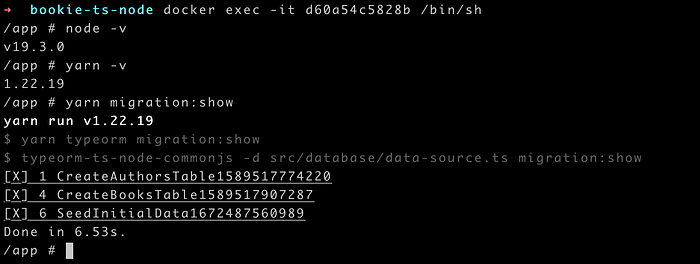

Now we need to find the container ID of our app in the docker ps output and open a shell inside the container (replace 382fdc62d530 with your own container ID): docker exec -it 382fdc62d530 /bin/sh

We can now run commands inside this container as if we were on the local machine.

Please note: if you don’t wish to work with Docker and you already have Node.js, MySQL, and yarn installed on your machine, you can simply follow the rest of the commands locally. From here on, we will run all of the commands from inside the container.

Initialize a new Node.js project by running yarn init -y. This will create a package.json file in your project directory.

Install the required dependencies by running:

yarn add express @types/express dotenv typeorm reflect-metadata mysql2 cors body-parser express-async-errors

Install TypeScript, nodemon, and the remaining type definitions as development dependencies by running:

yarn add -D typescript ts-node tsconfig-paths @types/node nodemon @types/cors @types/body-parser

Create a tsconfig.json file by running npx tsc --init. This will create a default TypeScript configuration file. Please make sure the following options are set:

"experimentalDecorators": true,

"strictPropertyInitialization": false, //set it to false

"emitDecoratorMetadata": true,

"noImplicitAny": false,

Create a .env file in the root of your project (touch .env) to store the environment variables:

APP_PORT=3000

DB_CONNECTION=mysql

DB_HOST="mysql"

DB_PORT=3306

DB_USERNAME=bookie

DB_PASSWORD=bookie

DB_DATABASE=bookie

DB_ENTITIES=src/entity/**/*.ts

DB_MIGRATIONS=src/migration/**/*.ts

DB_CLI_MIGRATIONS_DIR=src/migration

We need to update our package.json to include the dev script that yarn dev runs, so the file looks like this:

{

"scripts": {

"build": "tsc",

"dev": "nodemon --watch src --exec 'ts-node' src/index.ts"

}

}

Adding & running the migrations

Recent versions of TypeORM (0.3 and later) introduced some breaking changes: the ormconfig file is no longer used, and the connection is configured through a data source instead. So let’s create a file, data-source.ts, inside the src/database directory with the following contents:

import * as dotenv from "dotenv";

import { DataSource } from "typeorm";

dotenv.config();

export const AppDataSource = new DataSource({

type: "mysql",

host: process.env.DB_HOST || "127.0.0.1",

port: Number(process.env.DB_PORT) || 3306,

username: process.env.DB_USERNAME || "user",

password: process.env.DB_PASSWORD || "root",

database: process.env.DB_DATABASE || "bookie",

migrations: ["src/database/migrations/*.{js,ts}"],

logging: process.env.ORM_LOGGING === "true",

entities: ["src/entities/**/*.{js,ts}"],

synchronize: false,

subscribers: [],

});

We want to create migrations using the CLI, but because of the breaking changes in the latest version of typeorm, we will wrap the command in a small script that uses the yargs package to read the migration name. Create a folder called scripts in the root directory and add a file, migration-create.js, with the following contents:

#!/usr/bin/env node

const yargs = require("yargs");

const { execSync } = require("child_process");

// Parse the command-line arguments

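const {

_: [name],

} = yargs.argv;

// Fail early with a usage hint when no migration name is given

if (!name) {

console.error("Usage: yarn migration:create <MigrationName>");

process.exit(1);

}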

// Construct the migration path

const migrationPath = `src/database/migrations/${name}`;

// Run the typeorm command

execSync(`typeorm migration:create ${migrationPath}`, { stdio: "inherit" });

Now let’s update our package.json to include scripts to create a new migration, show the list of migrations, and revert the latest one:

"scripts": {

"build": "tsc",

"dev": "nodemon --watch src --exec 'ts-node' src/index.ts",

"start": "node dist/index.js",

"typeorm": "typeorm-ts-node-commonjs -d src/database/data-source.ts",

"migration:show": "yarn typeorm migration:show",

"migration:create": "node scripts/migration-create.js",

"migration:revert": "yarn typeorm migration:revert"

}

With this in place, we can run yarn migration:create CreateAuthorsTable to create a new migration file. Fill it in with the following contents:

import { MigrationInterface, QueryRunner, Table } from "typeorm"

export class CreateAuthorsTable1589517774220 implements MigrationInterface {

public async up(queryRunner: QueryRunner): Promise<void> {

await queryRunner.createTable(

new Table({

name: "authors",

columns: [

{

name: "id",

type: "int",

isPrimary: true,

isGenerated: true,

generationStrategy: "increment",

},

{

name: "name",

type: "varchar",

length: "255",

isNullable: false,

},

{

name: "email",

type: "varchar",

length: "255",

isNullable: false,

},

],

}),

true

)

}

public async down(queryRunner: QueryRunner): Promise<void> {

await queryRunner.dropTable("authors")

}

}

Let’s quickly create the books table as well: yarn migration:create CreateBooksTable

import { MigrationInterface, QueryRunner, Table, TableForeignKey } from "typeorm"

export class CreateBooksTable1589517907287 implements MigrationInterface {

public async up(queryRunner: QueryRunner): Promise<void> {

await queryRunner.createTable(

new Table({

name: "books",

columns: [

{

name: "id",

type: "int",

isPrimary: true,

isGenerated: true,

generationStrategy: "increment",

},

{

name: "title",

type: "varchar",

length: "255",

isNullable: false,

},

{

name: "description",

type: "text",

isNullable: false,

},

{

name: "authorId",

type: "int",

isNullable: false,

},

{

name: "price",

type: "int",

isNullable: false,

},

{

name: "category",

type: "varchar",

length: "255",

isNullable: false,

},

],

}),

true

)

await queryRunner.createForeignKey(

"books",

new TableForeignKey({

columnNames: ["authorId"],

referencedColumnNames: ["id"],

referencedTableName: "authors",

onDelete: "CASCADE",

})

)

}

public async down(queryRunner: QueryRunner): Promise<void> {

await queryRunner.dropTable("books")

}

}

We also want to seed some data into the tables, and we will use a migration for this as well. Create the migration file with yarn migration:create SeedInitialData and give it the following contents:

import { MigrationInterface, QueryRunner } from "typeorm";

export class SeedInitialData1672487560989 implements MigrationInterface {

public async up(queryRunner: QueryRunner): Promise<void> {

await queryRunner.query(

"INSERT IGNORE INTO authors (id, name, email) VALUES \n" +

" (1, 'John Smith', 'john@example.com'), \n" +

" (2, 'Jane Doe', 'jane@example.com')"

);

await queryRunner.query(

"INSERT IGNORE INTO books (id, title, description, price, authorId, category) VALUES \n" +

" (1, 'The Alchemist', 'A book about following your dreams', 10.99, 1, 'Fiction'), \n" +

" (2, 'The Subtle Art of Not Giving a F*ck', 'A book about learning to prioritize your values', 12.99, 2, 'Self-help')"

);

}

public async down(queryRunner: QueryRunner): Promise<void> {

await queryRunner.query(`DELETE FROM books`);

await queryRunner.query(`DELETE FROM authors`);

}

}

Now we need to run the migrations to create the tables and seed the initial data. We can use the migration:run command:

yarn typeorm migration:run

Adding main logic to the REST API with routers & controllers

Now let’s jump into the main files. Our main entry point is src/index.ts:

import * as dotenv from "dotenv";

import "reflect-metadata";

import app from "./app";

import { AppDataSource } from "./database/data-source";

dotenv.config();

const PORT = process.env.APP_PORT || 3000;

AppDataSource.initialize()

.then(async () => {

console.log("Database connection success");

})

.catch((err) => console.log(err));

app.listen(PORT, () => {

console.log(`Server is running on port ${PORT}`);

});

You can see we are importing a file, app.ts. Its contents should be:

import bodyParser from "body-parser";

import cors from "cors";

import express, { Express, NextFunction, Request, Response } from "express";

import "reflect-metadata";

import authorsRoutes from "./routes/author";

import booksRoutes from "./routes/book";

import { ErrorHandler } from "./utils/Errorhandler";

const app: Express = express();

app.use(cors());

app.use(bodyParser.json());

app.use("/authors", ErrorHandler.handleErrors(authorsRoutes));

app.use("/books", ErrorHandler.handleErrors(booksRoutes));

app.all("*", (req: Request, res: Response) => {

return res.status(404).send({

success: false,

message: "Invalid route",

});

});

// Define a middleware function to handle errors

app.use((err: any, req: Request, res: Response, next: NextFunction) => {

console.log(err);

return res.status(500).send({

success: false,

message: "Internal server error",

});

});

export default app;

As we can see, app.ts imports ErrorHandler, so we need to create a new file, Errorhandler.ts, inside the utils directory with the following content:

export class ErrorHandler {

static handleErrors(fn) {

return (req, res, next) => {

Promise.resolve(fn(req, res, next)).catch(next);

};

}

}

Working with TypeORM Entities:

Now we need to create the entities for Book and Author, so let’s create two files inside the entities directory: touch Book.ts && touch Author.ts

import { Column, Entity, OneToMany, PrimaryGeneratedColumn } from "typeorm"

import { Book } from "./Book"

@Entity("authors")

export class Author {

@PrimaryGeneratedColumn()

id: number

@Column()

name: string

@Column()

email: string

@OneToMany(type => Book, book => book.author)

books: Book[]

}

import { Column, Entity, ManyToOne, PrimaryGeneratedColumn } from "typeorm";

import { Author } from "./Author";

@Entity("books")

export class Book {

@PrimaryGeneratedColumn()

id: number;

@Column({ nullable: false })

title: string;

@Column()

description: string;

@ManyToOne((type) => Author, (author) => author.books, { eager: true })

author: Author;

@Column()

authorId: number;

@Column()

price: number;

@Column()

category: string;

}

We will create a utility class called ResponseUtil, which the controllers will use to format JSON responses in a consistent way. This helps us avoid repeating code and makes the application easier to maintain. Inside the utils directory, create a file for it: touch Response.ts

import { Response } from "express";

export class ResponseUtil {

static sendResponse<T>(

res: Response,

data: T,

statusCode = 200

): Response<T> {

return res.status(statusCode).send({

success: true,

message: "Success",

data,

});

}

static sendError(

res: Response,

message: string,

statusCode = 500,

errors: any = null

): Response {

return res.status(statusCode).send({

success: false,

message,

errors,

});

}

}

Now let’s update our author router file routes/author.ts:

import express from "express";

import { AuthorsController } from "../controllers/AuthorsController";

const authorsController = new AuthorsController();

const router = express.Router();

router.get("/", authorsController.getAuthors);

router.get("/:id", authorsController.getAuthor);

router.post("/", authorsController.createAuthor);

router.put("/:id", authorsController.updateAuthor);

router.delete("/:id", authorsController.deleteAuthor);

export default router;

So our controllers/AuthorsController.ts looks like this:

import { Request, Response } from "express";

import { AppDataSource } from "./../database/data-source";

import { Author } from "./../entities/Author";

import { ResponseUtil } from "./../utils/Response";

export class AuthorsController {

async getAuthors(req: Request, res: Response): Promise<Response> {

const authors = await AppDataSource.getRepository(Author).find();

return ResponseUtil.sendResponse(res, authors, 200);

}

async getAuthor(req: Request, res: Response): Promise<Response> {

const { id } = req.params;

const author = await AppDataSource.getRepository(Author).findOneBy({

id: parseInt(id, 10),

});

if (!author) {

return ResponseUtil.sendError(res, "Author not found", 404);

}

return ResponseUtil.sendResponse(res, author, 200);

}

async createAuthor(req: Request, res: Response, next): Promise<Response> {

const authorData = req.body;

const repo = AppDataSource.getRepository(Author);

const author = repo.create(authorData);

await repo.save(author);

return ResponseUtil.sendResponse(res, author, 201);

}

async updateAuthor(req: Request, res: Response): Promise<Response> {

const { id } = req.params;

const authorData = req.body;

const repo = AppDataSource.getRepository(Author);

const author = await repo.findOneBy({

id: parseInt(id, 10),

});

if (!author) {

return ResponseUtil.sendError(res, "Author not found", 404);

}

repo.merge(author, authorData);

await repo.save(author);

return ResponseUtil.sendResponse(res, author);

}

async deleteAuthor(req: Request, res: Response): Promise<Response> {

const { id } = req.params;

const repo = AppDataSource.getRepository(Author);

const author = await repo.findOneBy({

id: parseInt(id, 10),

});

if (!author) {

return ResponseUtil.sendError(res, "Author not found", 404);

}

await repo.remove(author);

return ResponseUtil.sendResponse(res, null);

}

}

Similarly, our books router, routes/book.ts, would be:

import express from "express";

import { BooksController } from "../controllers/BooksController";

const booksController = new BooksController();

const router = express.Router();

router.get("/", booksController.getBooks);

router.get("/:id", booksController.getBook);

router.post("/", booksController.createBook);

router.put("/:id", booksController.updateBook);

router.delete("/:id", booksController.deleteBook);

export default router;

So our BooksController would look like this:

import { Request, Response } from "express";

import { AppDataSource } from "./../database/data-source";

import { Book } from "./../entities/Book";

import { ResponseUtil } from "./../utils/Response";

export class BooksController {

async getBooks(req: Request, res: Response): Promise<Response> {

const books = await AppDataSource.getRepository(Book).find();

return ResponseUtil.sendResponse(res, books, 200);

}

async getBook(req: Request, res: Response): Promise<Response> {

const { id } = req.params;

const book = await AppDataSource.getRepository(Book).findOneBy({

id: parseInt(id, 10),

});

if (!book) {

return ResponseUtil.sendError(res, "Book not found", 404);

}

return ResponseUtil.sendResponse(res, book, 200);

}

async createBook(req: Request, res: Response): Promise<Response> {

const bookData = req.body;

const repo = AppDataSource.getRepository(Book);

const book = repo.create(bookData);

await repo.save(book);

return ResponseUtil.sendResponse(res, book, 201);

}

async updateBook(req: Request, res: Response): Promise<Response> {

const { id } = req.params;

const bookData = req.body;

const repo = AppDataSource.getRepository(Book);

const book = await repo.findOneBy({

id: parseInt(id, 10),

});

if (!book) {

return ResponseUtil.sendError(res, "Book not found", 404);

}

repo.merge(book, bookData);

await repo.save(book);

return ResponseUtil.sendResponse(res, book);

}

async deleteBook(req: Request, res: Response): Promise<Response> {

const { id } = req.params;

const repo = AppDataSource.getRepository(Book);

const book = await repo.findOneBy({

id: parseInt(id, 10),

});

if (!book) {

return ResponseUtil.sendError(res, "Book not found", 404);

}

await repo.remove(book);

return ResponseUtil.sendResponse(res, null);

}

}

Running the server

Run yarn dev to start the development server.

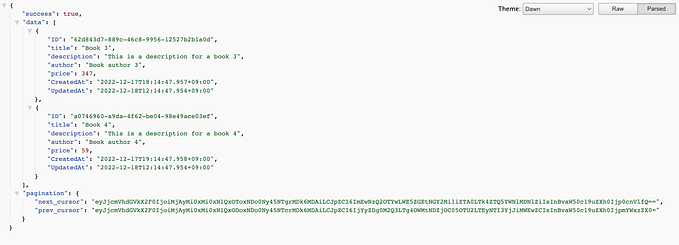

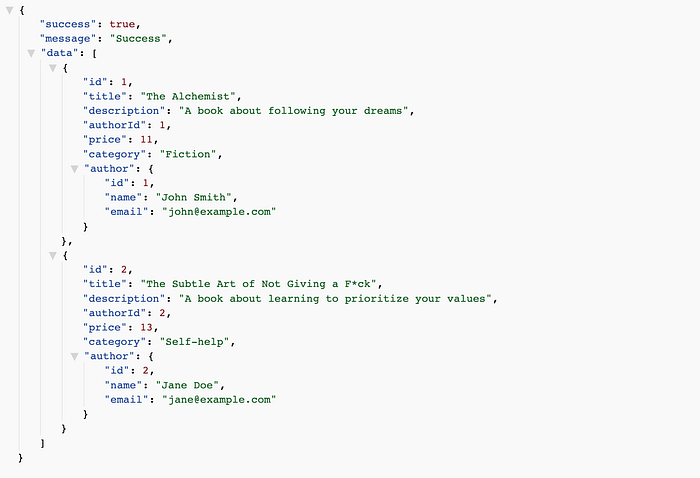

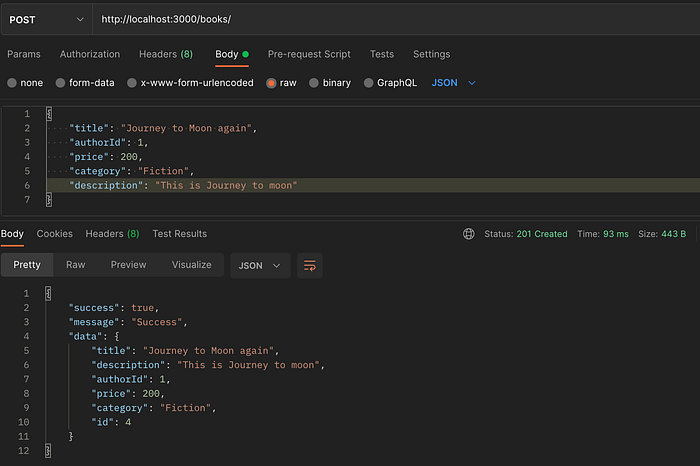

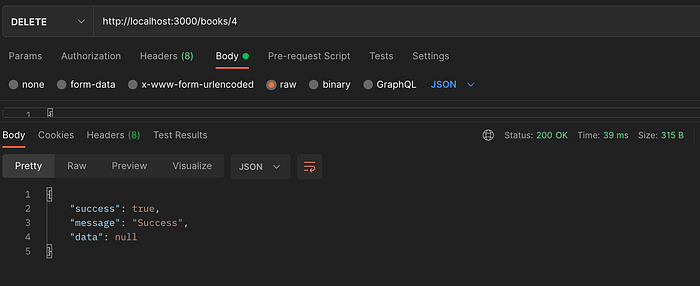

Verifying the endpoints with Postman

Now we can test these endpoints using Postman or a browser.
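If you would rather script these checks than click through Postman, the same requests can be sketched in TypeScript. This is only a minimal sketch under a few assumptions: the API is reachable at http://localhost:3000, the seeded author with id 1 exists, and smokeTestBooks, FetchLike, and HttpResponse are hypothetical names introduced here for illustration. The fetch function is passed in as a parameter so the sketch does not depend on any particular HTTP client.

```typescript
// Minimal response/fetch shapes so the sketch type-checks without extra typings.
type HttpResponse = { status: number; json(): Promise<unknown> };
type FetchLike = (
  url: string,
  init?: { method?: string; headers?: Record<string, string>; body?: string }
) => Promise<HttpResponse>;

// Assumption: the API from this tutorial is listening on this address.
const BASE_URL = "http://localhost:3000";

// Creates a book, reads it back, deletes it, and returns the three status codes.
async function smokeTestBooks(
  fetchFn: FetchLike,
  baseUrl: string = BASE_URL
): Promise<number[]> {
  const created = await fetchFn(`${baseUrl}/books`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({
      title: "Test book",
      description: "Created by the smoke test",
      authorId: 1, // assumes the seeded author with id 1 exists
      price: 10,
      category: "Test",
    }),
  });

  // ResponseUtil wraps every payload as { success, message, data }.
  const payload = (await created.json()) as { data: { id: number } };
  const id = payload.data.id;

  const fetched = await fetchFn(`${baseUrl}/books/${id}`);
  const deleted = await fetchFn(`${baseUrl}/books/${id}`, { method: "DELETE" });

  return [created.status, fetched.status, deleted.status];
}

// On Node 18+, where fetch is available globally:
// smokeTestBooks(fetch).then((statuses) => console.log(statuses));
```

If everything is wired up as in this tutorial, the three statuses should be 201, 200, and 200.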

Good job! One thing we are still missing is input validation. To validate the provided input, we will use a popular library called ‘class-validator’, which provides a simple and powerful way to validate objects in TypeScript. With class-validator, we can define validation rules for the incoming data and ensure that only valid data is accepted by the API.

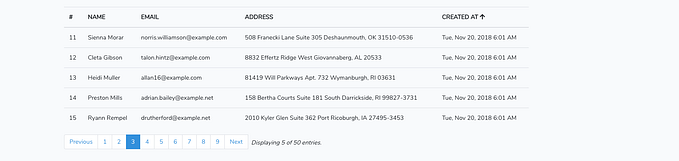

Validating the input data using DTO

Using Data Transfer Objects (DTOs) can be a good way to validate request data at the topmost level of your application.

A DTO is a class that defines the shape of the request data, and you can use it to validate the data before it is passed to the repository or service layer. This helps ensure that the data is in a consistent and expected format, and it avoids repeating validation checks at different levels of your application.

We will use the class-validator library for the validation. First, install it: yarn add class-validator

Now create a directory called DTOs and, inside it, a file called CreateBookDTO.ts with the following contents:

import { IsNotEmpty, IsInt } from "class-validator"

export class CreateBookDTO {

@IsNotEmpty()

title: string

@IsNotEmpty()

description: string

@IsInt()

authorId: number

@IsInt()

price: number

@IsNotEmpty()

category: string

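}

Now we need to update the createBook method to perform the validation as well (import validate from class-validator and CreateBookDTO from the DTOs directory at the top of the controller):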

async createBook(req: Request, res: Response): Promise<Response<Book>> {

const bookData = req.body;

const dto = new CreateBookDTO();

Object.assign(dto, bookData);

const errors = await validate(dto);

if (errors.length > 0) {

return ResponseUtil.sendError(res, "Invalid data", 400, errors);

}

const repo = AppDataSource.getRepository(Book);

const book = repo.create(bookData);

await repo.save(book);

return ResponseUtil.sendResponse(res, book, 201);

}

If we now pass invalid data while creating a book, the API responds with a 400 status and the list of validation errors.

Similarly, you can create DTOs for the other methods and validate their input in the same way.
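To make the rules concrete, here is a dependency-free sketch of roughly what the CreateBookDTO checks amount to, extended with a partial mode that could suit an update endpoint. It is an illustration only, not class-validator’s implementation: validateBookInput is a hypothetical helper, its error messages are made up for this example, and its string check is slightly stricter than @IsNotEmpty() because it also rejects non-string and whitespace-only values.

```typescript
// Field groups taken from CreateBookDTO.
const STRING_FIELDS = ["title", "description", "category"] as const;
const INT_FIELDS = ["authorId", "price"] as const;

// Returns a list of human-readable problems; an empty list means the body is valid.
// With partial = true (for example, on update), missing fields are allowed,
// but any field that is present must still pass its rule.
function validateBookInput(
  body: Record<string, unknown>,
  partial: boolean = false
): string[] {
  const errors: string[] = [];

  for (const field of STRING_FIELDS) {
    const value = body[field];
    if (value === undefined && partial) continue;
    if (typeof value !== "string" || value.trim() === "") {
      errors.push(`${field} must be a non-empty string`);
    }
  }

  for (const field of INT_FIELDS) {
    const value = body[field];
    if (value === undefined && partial) continue;
    // A whole, finite number counts as an integer here.
    if (typeof value !== "number" || !isFinite(value) || Math.floor(value) !== value) {
      errors.push(`${field} must be an integer`);
    }
  }

  return errors;
}

// Example: a create request with a non-integer price and no category.
const problems = validateBookInput({
  title: "The Alchemist",
  description: "A book about following your dreams",
  authorId: 1,
  price: 10.99,
});
console.log(problems);
```

In a controller, a non-empty result would be passed to ResponseUtil.sendError with status 400, in the same way as the errors returned by validate.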

Conclusion

In this tutorial, we learned how to implement a REST API with Node.js, Express, TypeORM, TypeScript, and MySQL. We started by setting up a development environment with Docker, and then we created a new Node.js project with TypeScript. We then installed the required dependencies, including Express, TypeORM, and the MySQL driver.

Next, we configured TypeORM to connect to a MySQL database and defined our entity models using the decorators provided by TypeORM. We then implemented the API routes and controllers using the Express router.

Finally, we tested the API using a tool like Postman to make sure it was working as expected.

Overall, this tutorial demonstrated how to use Node.js, Express, TypeORM, TypeScript, and MySQL to build a REST API that can be used to create, read, update, and delete data in a MySQL database.

Link to GitHub repository / source code: https://github.com/sadhakbj/Bookie-NodeJs-Typescript